
Using Python Projects With Pixi

Managing Python environments and dependencies is hard, as the myriad of Python package management tools that have sprung up over the years shows: poetry, conda, micromamba, hatch, pdm, uv, etc. These package managers are currently split across the conda and PyPI ecosystems. We've felt this pain ourselves, when some packages were available on conda but not on PyPI, and vice versa.

With pixi, a package manager for multiple programming languages, we want to unify the two worlds: you can use dependencies directly from both the conda ecosystem (through our library rattler) and the PyPI ecosystem (through our integration with uv). By integrating with both, we hope pixi closes the gap between conda and PyPI.

Why Should I Care?

If you are mainly a Python developer but are willing to look beyond the PyPI ecosystem, the conda ecosystem might be worth a look. Some quick benefits:

Install system dependencies in an isolated environment, e.g. SDL, OpenSSL, etc.

Manage non-Python dependencies that your project might use, e.g. node, npm, CMake, compilers.

With system-requirements (virtual packages), it becomes clear which "machines" your project supports, e.g. that it requires CUDA, and that requirement is locked into your project. For instance, `pixi add pytorch` selects the CPU or GPU build (and the CUDA version) based on the system requirements, vs. something like `pip3 install torch --index-url https://download.pytorch.org/whl/cu118`.

The good news is that, with the new changes, you can rely fully on PyPI packages using the project structure you already know, while being able to opt in to the conda ecosystem.

What Pixi Offers

In addition to the latest changes, when using pixi on a Python project you automatically get:

Management and installation of your Python interpreters, per project, for all major platforms.

Locked dependencies per major platform.

A task system (with caching) to run tasks within your project.

The option to use multiple environments with different dependencies (e.g. Python versions, or CUDA vs. non-CUDA) and tasks.

What's New?

Previous releases still lacked some features, which made switching to pixi harder than it should be. In the latest release we've added the following:

Pixi can now use pyproject.toml as its manifest file, so there is no need to use a pixi.toml. We plan to support both.

Pixi now supports PyPI source dependencies, including editable installs.

In the next release, pixi will get a much improved PyPI-conda mapping, which is used to skip installing PyPI packages that are already available in the conda environment.

Let's dive into the new features:

Pyproject.toml Support

When we created pixi, we introduced the pixi.toml manifest. We didn't start with pyproject.toml because pixi supports many different programming languages. However, Python developers, even conda users, tend to use a pyproject.toml. With pixi 0.18.0 we are launching experimental pyproject.toml support!

Existing projects do not need to change, since pixi.toml takes precedence. All pixi-specific functionality lives in the [tool.pixi.*] tables, a convention that should be familiar to pyproject.toml users.

PyPI Source Dependencies

We now support so-called direct URL dependencies: direct references to git repositories, wheels, or tar.gz source archives.

For example, with pixi.toml:

```toml
[pypi-dependencies]
requests = { git = "https://github.com/psf/requests.git", rev = "0106aced5faa299e6ede89d1230bd6784f2c3660" }
```

or with pyproject.toml:

```toml
[project]
dependencies = ["flask @ git+ssh://git@github.com/pallets/flask@b90a4f1f4a370e92054b9cc9db0efcb864f87ebe"]
```

And an editable install with pyproject.toml:

```toml
[tool.pixi.pypi-dependencies]
# A local path, which installs your project in editable mode.
test_project = { path = ".", editable = true }
```

For pixi.toml, drop the tool.pixi prefix, i.e. use [pypi-dependencies].

The PyPI-Conda Mapping

Because many Python conda packages are repackaged Python projects, they can be used interchangeably as dependencies. Sometimes you already have a conda package installed that can serve as a PyPI dependency. For example, you depend on click as a conda package but also use the flask PyPI package, which itself depends on click.

Here is that situation. I've used a pixi.toml in this case because it makes for a slightly shorter example:

```toml
[project]
name = "test"
version = "0.1.0"
description = "Testing out pypi and conda"
authors = ["Tim de Jager <tim@prefix.dev>"]
channels = ["conda-forge"]
platforms = ["osx-arm64"]

[tasks]

# These are conda dependencies
[dependencies]
# We depend on click as conda dependency
click = "*"

[pypi-dependencies]
# flask also depends on click, as a pypi dependency
flask = "*"
```

Here, pixi uses the conda click package to satisfy flask's dependency on click, effectively overriding the click from PyPI. In the past we used the well-known grayskull mapping for this, which already works pretty well.
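A simplified way to picture this override: once the conda packages in the environment are known, any PyPI requirement whose name is provided by one of them is dropped from the PyPI install list. The sketch below assumes a plain dictionary from conda names to PyPI names; the function and argument names are hypothetical, and pixi's real mapping also tracks versions, so its actual logic is more involved.

```python
import re


def normalize(name: str) -> str:
    # PEP 503 name normalization, so "Werkzeug" and "werkzeug" compare equal.
    return re.sub(r"[-_.]+", "-", name).lower()


def pypi_to_install(pypi_requirements, conda_packages, conda_to_pypi):
    """Return the PyPI requirements not already provided by conda packages.

    conda_to_pypi is a hypothetical {conda_name: pypi_name} lookup table.
    """
    provided = {
        normalize(conda_to_pypi[name])
        for name in conda_packages
        if name in conda_to_pypi
    }
    return [req for req in pypi_requirements if normalize(req) not in provided]
```

With click installed from conda, pypi_to_install(["flask", "click", "Werkzeug"], ["click", "python"], {"click": "click"}) keeps only flask and Werkzeug. An entry such as {"pytorch": "torch"} shows why a mapping is needed at all: the conda and PyPI names do not always match.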

However, one limitation of the grayskull mapping is that, to catch packages whose names differ between conda and PyPI, someone has to manually add them to a static configuration.

We wanted to go a step further and fully automate this process.

We have analysed more than 1,700,500 packages from conda-forge, and we rerun the analysis every hour to keep track of new ones. From the file paths contained in a conda package, we directly extract the associated Python package name and its version.
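The path-based extraction can be sketched as follows, assuming the Python metadata inside a conda package sits in a standard <name>-<version>.dist-info directory under site-packages (the wheel naming convention). The function name is hypothetical, the regex only recognizes .dist-info (not legacy .egg-info) directories, and this is an illustration rather than the code behind pixi's mapping.

```python
import re

# Matches e.g. "lib/python3.12/site-packages/click-8.1.7.dist-info/METADATA".
# In a dist-info name the project name uses '_' instead of '-', so the last
# '-' before ".dist-info" separates the name from the version.
DIST_INFO = re.compile(r"site-packages/([^/]+)-([^/-]+)\.dist-info/")


def python_names_from_paths(paths):
    """Return the {(pypi_name, version)} pairs found in a conda package's file list."""
    found = set()
    for path in paths:
        # Windows packages use backslashes, e.g. Lib\site-packages\...
        match = DIST_INFO.search(path.replace("\\", "/"))
        if match:
            name = re.sub(r"[-_.]+", "-", match.group(1)).lower()
            found.add((name, match.group(2)))
    return found
```

A package that ships no dist-info directory, such as a plain command-line binary, yields an empty set, which illustrates how a path-based approach can avoid mapping unrelated packages onto each other just because they share a name.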

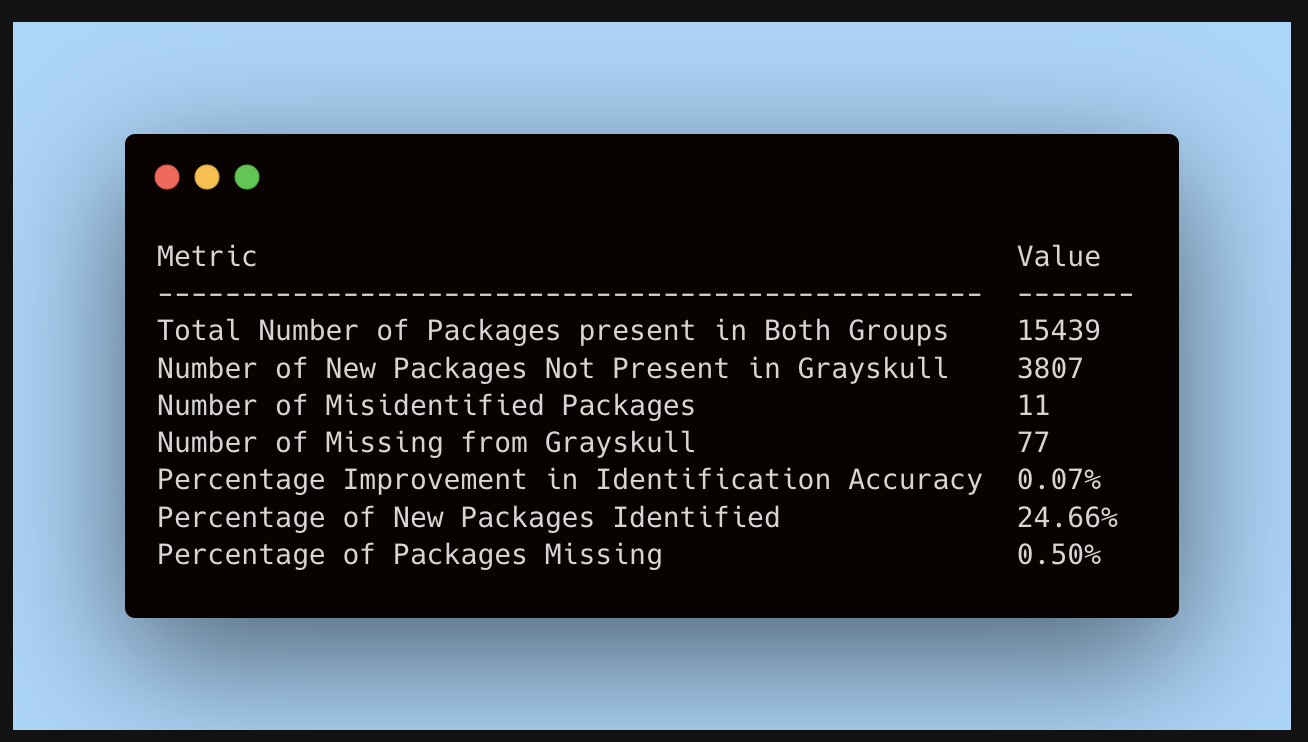

With this approach, we identified 3,807 new packages (redisgraph-py, scikit-geometry, and cloudpathlib-s3, to name a few), a 24.66% increase in our ability to correctly map not only a conda package name to its PyPI counterpart but also its version. A further benefit of this strategy is that it works with any conda channel, without the need to maintain a list manually.

Another benefit of this approach is that we avoid mapping different packages that happen to share a name. A good example is the pandoc edge case: conda's pandoc refers to the Haskell pandoc, which needs to be installed before PyPI's pandoc can be used, yet before the new mapping the two were incorrectly recognized as the same package. At pixi we care about developer experience and avoiding unexpected surprises (we think the only surprise in your life should be a good espresso), so with our new, exhaustive mapping we correctly install both pandocs.

If you are interested, you can take a look behind the curtain at how we do it here.

Some statistics compared to Grayskull:

Showcase

Let's show off a complete example with all of the features mentioned above.

Given the following file tree:

```
example_project
├── pixi.lock
├── pyproject.toml
└── test_project
    ├── __init__.py
    └── module.py
```

We can create a pyproject.toml:

pyproject.toml

```toml
[build-system]
requires = ["hatchling"]
build-backend = "hatchling.build"

[project]
name = "test_project"
version = "0.1.0"
requires-python = ">=3.9"
dependencies = ["rich"]

# We need the platforms to lock for, as well as the conda
# channels, which is where we get the Python executable from.
[tool.pixi.project]
name = "test_project"
channels = ["conda-forge"]
platforms = ["linux-64"]

[tool.pixi.pypi-dependencies]
# A local path, which installs your project in editable mode.
test_project = { path = ".", editable = true }

[tool.pixi.tasks]
# A task to run the Python module.
start = "python -c 'from test_project import module; module.hello()'"

[tool.pixi.dependencies]
rich = ">=13.7.1,<13.8"
```

With the following module:

module.py

```python
from rich import print


def hello():
    print('[italic green]Hello World![/italic green] I am a [bold cyan]Pixi[/bold cyan] installed module.')
```

To run the example, simply run:

```shell
pixi run start
```

This resolves and installs the conda and PyPI dependencies, then executes the start task. From the pyproject.toml, pixi reads requires-python and uses it to solve for the Python interpreter version.

```toml
[project]
# Will install Python >= 3.9 from conda-forge
requires-python = ">=3.9"
```

The dependencies in the pyproject.toml are interpreted by pixi as PyPI dependencies.

```toml
[project]
dependencies = ["rich"]

[tool.pixi.dependencies]
rich = ">=13.7.1,<13.8"
```

Specifying a dependency for both conda and PyPI makes pixi select the conda package by default.

Conclusion

We hope that with this release we've further closed the gap between the PyPI and conda ecosystems. We also hope that, whether or not you as a Python developer are invested in conda, we've made pixi a more interesting addition to your toolbelt!

Feel free to reach out on our social channels; we love to chat and to help with any packaging-related problems you are experiencing!

You can reach us on X, join our Discord, send us an e-mail or follow our GitHub.

We want to thank our open source contributors, in particular Olivier Lacroix, who did most of the work for the pyproject.toml support. We really appreciate his contributions.